As a society, we have a complicated relationship with social media. When we hear about online bullying, misinformation and hate speech, we rightly condemn the ugly shadow it casts on society. But when Russia bans Facebook in an attempt to control the narrative around Ukraine, it feels as if a fundamental human right is being taken away.

Our confusion is due to a conflict of values: freedom of expression vs freedom from harm. And it is one of the most important debates of our time. But it is also a debate that has become overly simplified, polarised and tribal.

What are the two sides of this debate?

One side consists of free speech absolutists who want no rules. They have fuelled the rise of new social media platforms, such as Gab and Donald Trump’s “Truth Social”, which place few limits on what you post. Such platforms have quickly descended into white nationalism, anti-semitism and QAnon conspiracy theories.

The other side wants greater state regulation of social media. The same impulse has produced new initiatives such as Google Fact Check, Good Information Inc and Trusted News Summit, which seek to verify what is true. This side often underestimates the risks of granting the state powers to police the internet. Social media crackdowns by political regimes looking to control the free flow of information show how this can go wrong.

Using Meta as an example (I use “Facebook” to refer to the specific social media platform), I will examine both sides of the debate and propose a solution aimed at resolving the values conflict.

Facebook under fire

Nearly a quarter of the world’s population is on Facebook, making it the most popular social media platform in the world.

But the credibility of their stated mission, to “build community and bring the world closer together”, has recently come under fire.

Whistleblowers have accused Facebook of knowingly choosing profit over society; they argue that, by promoting misinformation, Facebook is undermining the integrity of the democratic process. Critics also argue that social media misinformation has weakened society’s ability to respond effectively to the pandemic.

Mark Zuckerberg responded with the following:

“The idea that we allow misinformation to fester on our platform, or that we somehow benefit from this content, is wrong.”

Facebook’s defence is that they have more fact-checking partners than any other tech platform. Their efforts are certainly impressive: they remove over 5 million pieces of hateful content every month.

Facebook will also point you towards their community standards, which list four types of content they may take action on:

- Violent and criminal behaviour

- Safety concerns (such as bullying, child abuse, exploitation)

- Objectionable content (such as pornography and hate speech)

- Inauthentic content (such as misinformation)

Of these, there is little controversy over the first three. There is broad agreement that Facebook should act to prevent harm from violence, bullying and hate speech.

It is the fourth, misinformation, that is controversial.

The rise of misinformation

It sometimes feels like we are primed to simultaneously believe everything and nothing.

And this feeling is not new.

In 1835, the New York Sun newspaper claimed there was an alien civilisation on the moon. The story sold so well that it established the New York Sun as a leading publication.

However, targeted misinformation as a form of psychological control has grown with the rise of digital communication.

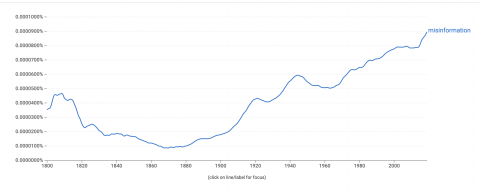

The Google Ngram Viewer, which measures how frequently words appear in books, shows a steep increase in our use of the word “misinformation”. To explain this rise, we need to understand how misinformation spreads.


Facebook’s algorithms are optimised for engagement, which often promotes content that elicits a strong emotional response. What matters to the algorithm is engagement, not the veracity of the information being shared.
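To make this concrete, here is a minimal sketch of an engagement-only ranker. The interaction weights, the `Post` fields and the example posts are hypothetical illustrations, not Facebook’s actual system; the point is simply that a post’s accuracy never enters the score.

```python
from dataclasses import dataclass

# Hypothetical weights: interactions that signal a stronger reaction count for more.
WEIGHTS = {"likes": 1.0, "comments": 4.0, "shares": 8.0}

@dataclass
class Post:
    title: str
    likes: int
    comments: int
    shares: int
    is_accurate: bool  # known to us, but never read by the ranker

def engagement_score(post: Post) -> float:
    """Score a post purely on how much interaction it attracts."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["comments"] * post.comments
            + WEIGHTS["shares"] * post.shares)

def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts by engagement, highest first; veracity plays no part."""
    return sorted(posts, key=engagement_score, reverse=True)

feed = rank_feed([
    Post("Council publishes annual budget", likes=120, comments=5, shares=2,
         is_accurate=True),
    Post("Outrageous (and false) claim about a politician", likes=90,
         comments=60, shares=40, is_accurate=False),
])
for post in feed:
    print(f"{engagement_score(post):>6.1f}  {post.title}")
```

The accurate post has more likes, but the false one provokes far more comments and shares, so it scores 650 against 156 and tops the feed.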

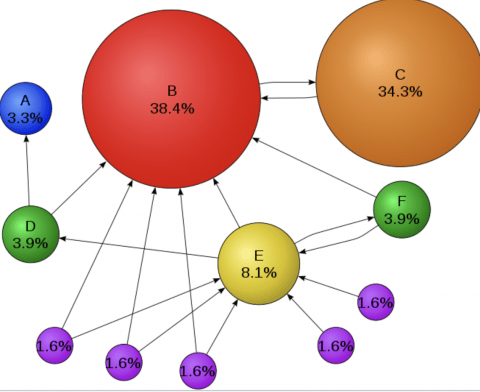

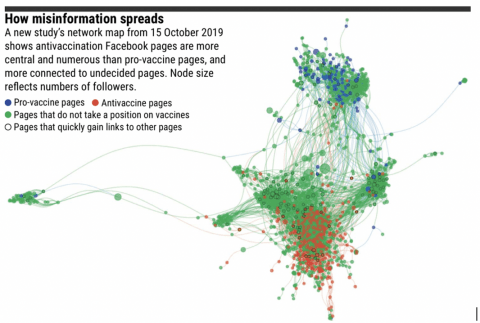

Interestingly, misinformation does not travel far when there are few echo chambers. Content travels only until it reaches a user who disagrees with it, fact-checks it and exposes it as misinformation.

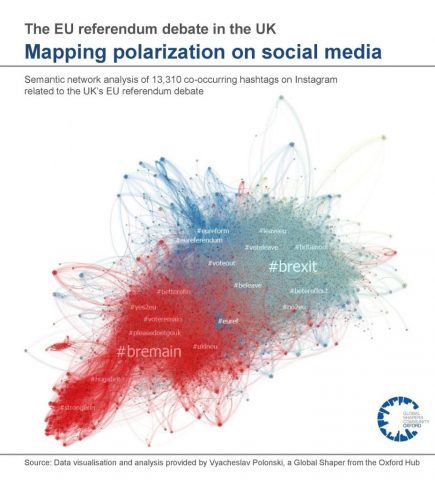

However, online groups are increasingly segregated by ideological beliefs. In these bubbles, misinformation can travel far through the network without being challenged.
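This mechanism can be illustrated with a deliberately simple toy model. It is an assumption of this sketch, not a model taken from published research. Users sit on a ring and are friends with the two users either side of them. Users who agree with a post reshare it, while users who disagree see it but stop it there by fact-checking it. Running the same post through a mixed ring and a segregated ring shows how much further it gets inside an echo chamber.

```python
from collections import deque

def spread(neighbours, belief, seed, post_side):
    """Breadth-first spread of a post: users who agree with it reshare it,
    users who disagree see it but do not pass it on (they fact-check it)."""
    reached = {seed}
    queue = deque([seed])
    while queue:
        user = queue.popleft()
        if belief[user] != post_side:
            continue  # a sceptic sees the post but stops it here
        for friend in neighbours[user]:
            if friend not in reached:
                reached.add(friend)
                queue.append(friend)
    return reached

def ring(n):
    """Each user is friends with the users either side of them."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}

n = 12
network = ring(n)
# Mixed network: neighbours alternate between viewpoints A and B.
mixed = {i: "A" if i % 2 == 0 else "B" for i in range(n)}
# Segregated network: all the A users sit together, as in an echo chamber.
segregated = {i: "A" if i < n // 2 else "B" for i in range(n)}

print(len(spread(network, mixed, seed=0, post_side="A")))       # 3
print(len(spread(network, segregated, seed=0, post_side="A")))  # 8
```

In the mixed ring the post is stopped by the first two sceptics it meets; in the segregated ring it passes unchallenged through every like-minded user before reaching anyone who disagrees.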

Below is a visual representation of the echo chambers from the UK’s EU referendum debate:

Confirmation bias is our tendency to seek out and believe information that aligns with our existing views. Psychologists have found that once someone’s mind is made up, it is difficult to change. So rather than trying to change minds, misinformation exploits algorithms, echo chambers and confirmation bias to amplify and strengthen existing views. This can lead to extremism.

This is particularly problematic when it spills out into the real world.

Examples of the real-world impact of misinformation:

- The 2021 attack on the US Capitol, with roots in the QAnon conspiracy theory

- Disrupted elections in countries including Ghana, Nigeria, Togo, Tunisia and the Netherlands

- Low uptake of Covid-19 vaccines, linked to a variety of misinformation sources

- The deaths at Charlottesville, fuelled by right-wing extremist forums

- The ‘Pizzagate’ shooting, fuelled by a conspiracy theory that later fed into QAnon

Furthermore, misinformation has been found to undermine our trust in institutions and spread false science, which impacts public health and disrupts the democratic process.

So, what action should be taken?

The argument against censorship

In order to combat the harms of misinformation, advocates are arguing for greater state intervention to regulate social media and to remove misinformation.

For example, UN Secretary-General Antonio Guterres has called for global rules to regulate social media content.

“I do not think that we can live in a world where too much power is given to a reduced number of companies. We need a new regulatory framework with rules that allow for that to be done in line with law.”

~ United Nations chief, Secretary-General Antonio Guterres

It is hard to disagree with the sentiment behind this statement. Without appropriate checks, the power of tech companies could balloon beyond our control.

However, before we grant any body the power to decide what information we consume, we should consider the potential downsides.

Firstly, censoring social media posts may violate Article 10 of the Human Rights Act, which states:

“Everyone has the right to freedom of expression. This right shall include freedom to hold opinions and to receive and impart information and ideas without interference by public authority.”

~ Article 10 of the Human Rights Act

Interestingly, the UK Government is currently looking to reform the Human Rights Act, replacing it with a “Bill of Rights”.

One proposed change is that an individual’s human rights could be overridden if doing so “safeguards the broader public interest” (page 56, Human Rights Reform). This change may give the state greater scope to censor misinformation.

A second argument against censorship of misinformation is that it is open to abuse by political regimes looking to centralise power. The power to decide what is, and what is not, misinformation could lead to a clampdown on protest, collective action and political activism.

Could the Arab Spring have happened if Twitter and Facebook had been policed by the authorities?

Whilst the role of social media in the Arab Spring is likely overplayed by journalists, careful academic analysis has found that social media has indeed led to an increase in positive collective action in countries with oppressive governments.

Furthermore, we can look at the track record of countries that have banned social media platforms:

- China

- Russia

- Iran

- North Korea

All of these countries are listed in the “Index of Freedom in the World” as having some of the worst civil liberties in the world. I am not arguing that they have poor civil liberties because they ban social media; I am observing that states that try to control information online tend to have poor human rights records.

Even if state censorship is not the solution, we do have a problem.

Trust in traditional news sources is quickly evaporating. Into this vacuum rushes misinformation, creating a wind strong enough that it has blown away our grip on what is true. This has harmed society through vaccine hesitancy, increased extremism and the disruption of democratic processes.

But a knee-jerk reaction that hands the state the power to decide what is true could cause greater harm than misinformation itself.

And anyway, it is likely a violation of human rights.

Challenges to Facebook’s solution

Facebook’s aim is to prevent regulation by governments through self-regulation.

Their strategy to tackle misinformation includes:

- Applying machine learning to detect misinformation

- Response teams to fact-check flagged content

- Partnering with third-party fact-checking organisations to verify content

However, Facebook will admit the limitations of this largely automated approach. For example, how could an automated program tell the difference between satirical websites and intentional misinformation?

In addition, vast amounts of clickbait exist for the sole purpose of turning clicks into advertising revenue. These sites often use templates that mimic official news websites, with enticing headlines that trick viewers into clicking.

These sites are not misinformation, but neither are they trusted sources of information.

Between satire and clickbait sit gossip columns and opinion journalism. This is “legitimate” media that makes no claim to objectivity. For example, Ben Shapiro, a right-wing American media commentator, openly admits that his news website, The Daily Wire, exists not to report the news but to ‘win a culture war’. The spin and exaggeration typical of these publishers are a grey area for automated programs looking for misinformation.

Mark Zuckerberg has recently said that “Facebook does more to address misinformation than any other company”. He may be right. But is it enough?

A proposed solution

We have seen that the problem of misinformation comes down to a conflict of values: freedom of expression vs freedom from harm. Any solution must seek to balance the two.

I offer a potential solution only as a thought experiment – after all, it’s easier to pick holes in the work of others and much harder to suggest something yourself. Admittedly, this is not my area of expertise and any suggestions will be impractical at best!

With that caveat said, I would propose three points:

- Do not seek to censor misinformation in an individual’s social media posts

- Algorithmically promote authoritative content over engaging content

- Limit echo chambers

1. Not censoring misinformation in individuals’ posts

This protects an individual’s human rights. It also safeguards activists who wish to use social media to mobilise against oppressive political regimes.

But this is not freedom of speech at all costs.

Facebook should continue to take down content encouraging violent behaviour, bullying, exploitation and hate speech.

2. Promoting authoritative content over engaging content

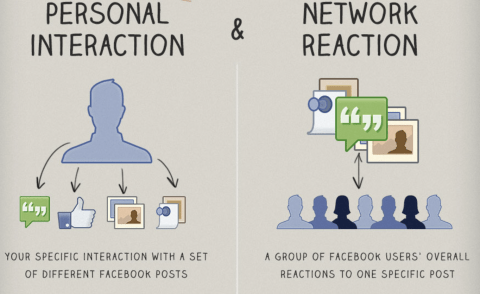

Facebook’s news feed algorithm, originally known as “EdgeRank”, determines what content is shown in your news feed and in which order.

It personalises your feed by combining your personal interests with how the wider community has interacted with a particular post.
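As it was publicly described around 2010, EdgeRank scored a story by adding up its “edges” (interactions such as likes, comments and shares), each weighted by three factors. The symbols below are our own notation, and today’s news feed reportedly relies on many more signals than this:

```latex
\text{EdgeRank} = \sum_{e \,\in\, \text{edges}} u_e \, w_e \, d_e
```

Here $u_e$ is the affinity between the viewer and the person who created the edge, $w_e$ is a weight for the type of edge (a comment typically counting for more than a like) and $d_e$ is a time-decay factor that shrinks as the edge gets older. Nothing in the formula measures whether the content is true.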

Facebook’s algorithm promotes content with high engagement. Often, this works well and you see posts from friends and family, alongside viral memes and cat videos. However, the same mechanism also promotes emotive content, which is often divisive or untrue.

The algorithm, however, could be adapted to work in a similar way to Google’s PageRank algorithm.

Google promotes authoritative – as opposed to engaging – content. It does this by analysing how many other websites link to a piece of content (so-called “back-links”) and how authoritative those linking sites are themselves. For example, my referring you to this detailed breakdown of how Google’s PageRank algorithm works counts as a signal of that page’s trustworthiness.
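For readers who want to see the idea, here is a minimal sketch of the textbook PageRank calculation, using power iteration with the conventional damping factor of 0.85. It is a simplification of the published algorithm, not Google’s production ranking, and the link graph and site names are invented for illustration. Note that a site’s score depends not just on how many sites link to it, but on how highly those linking sites score themselves.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Simplified PageRank by power iteration.

    links maps each site to the sites it links out to. Each round, a site
    passes its current score to the sites it links to, split evenly across
    its outgoing links, so a link from a high-scoring site is worth more.
    """
    sites = set(links) | {t for targets in links.values() for t in targets}
    n = len(sites)
    rank = {site: 1.0 / n for site in sites}
    for _ in range(iterations):
        # Every site gets a small baseline share (the "random jump" term).
        new_rank = {site: (1.0 - damping) / n for site in sites}
        for site in sites:
            targets = links.get(site, [])
            if targets:
                share = damping * rank[site] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A site with no outgoing links spreads its score evenly.
                for other in sites:
                    new_rank[other] += damping * rank[site] / n
        rank = new_rank
    return rank

# Hypothetical link graph: several sites cite the news site; nobody cites the clickbait.
web = {
    "blog-a.example": ["news.example"],
    "blog-b.example": ["news.example", "blog-a.example"],
    "uni.example": ["news.example"],
    "news.example": ["uni.example"],
    "clickbait.example": ["news.example"],
}
scores = pagerank(web)
for site, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{score:.3f}  {site}")
```

No single site declares news.example trustworthy; its high score emerges from the pattern of links, which is the decentralised property the proposal relies on.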

When an individual posts an article from a website onto Facebook, Facebook does not necessarily need to fact-check the website. Instead, it could algorithmically determine the authority of the website in a similar manner to Google.

If the news source is authoritative, such as the BBC, it would be prioritised in news feeds over misinformation, spam or clickbait.
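A minimal sketch of how such prioritisation could work: each shared link is scored by blending its engagement with the authority of the domain it points to. The `AUTHORITY` table, the `blend` weight, the article paths and the engagement figures are all hypothetical; in practice the authority scores would come from a link analysis such as PageRank over the wider web, not from a hand-written table.

```python
from urllib.parse import urlparse

# Hypothetical authority scores per domain, scaled to 0-1
# (e.g. the output of a PageRank-style link analysis). Unknown domains score 0.
AUTHORITY = {"www.bbc.co.uk": 0.95, "clickbait.example": 0.05}

def authority_of(url: str) -> float:
    """Look up the authority of the domain a shared post links to."""
    return AUTHORITY.get(urlparse(url).netloc, 0.0)

def feed_score(engagement: float, url: str, blend: float = 0.8) -> float:
    """Blend normalised engagement (0-1) with source authority (0-1).

    blend is the weight given to authority; blend=0.0 reproduces a
    purely engagement-driven feed.
    """
    return blend * authority_of(url) + (1.0 - blend) * engagement

# (url, normalised engagement) pairs; the paths are made up.
posts = [
    ("https://www.bbc.co.uk/news/example-article", 0.30),
    ("https://clickbait.example/you-wont-believe", 0.90),
]
by_authority = sorted(posts, key=lambda p: feed_score(p[1], p[0]), reverse=True)
by_engagement = sorted(posts, key=lambda p: feed_score(p[1], p[0], blend=0.0),
                       reverse=True)
print([urlparse(u).netloc for u, _ in by_authority])
print([urlparse(u).netloc for u, _ in by_engagement])
```

With `blend=0.8` the BBC article scores 0.82 against 0.22 and comes first; with `blend=0.0` the clickbait wins on engagement alone. The single `blend` parameter makes the trade-off between an engaging feed and an authoritative one explicit.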

Critically, this process is decentralised.

No one individual has decided that the BBC is authoritative. Instead, this conclusion is the result of crowdsourcing.

Millions of individual websites have referenced the BBC, much like an authoritative scholar receives citations if their work is legitimate. Therefore, the risks of centralised control are minimised.

This proposed solution would diminish the reach of misinformation without the need for Facebook to become the police of the internet.

So why has Facebook not tried something like this already?

Well, it would diminish their advertising revenues. Promoting authoritative content over engaging content would make your news feed a bit… well, boring. This means less time spent on the platform, fewer clicks and less advertising revenue.

There is also the small issue that Google owns the intellectual property of PageRank. Facebook would need to develop its own algorithm that could deliver similar outcomes, but have sufficiently different methods that avoid IP infringement.

I did say these were just thought experiments limited by impracticality!

3. Limit echo chambers

Research suggests that misinformation does not travel far where there are fewer echo chambers. Furthermore, group polarisation theory suggests that echo chambers move entire groups into more extreme positions.

This suggests that maybe the misinformation isn’t the problem – maybe it’s echo chambers…

In a network with fewer clusters of segregated users, misinformation does not travel as far and has less impact on those it reaches. The problem is that “cluster of segregated users” is a technical way of saying “the community”!

Community is at the heart of Facebook, yet it is also what is driving polarisation.

So, removing echo chambers is not viable, but steps could be taken to limit their harm.

These steps could include:

- Facebook could let users turn off personalisation in their news feeds, so they can see what others are consuming, algorithm-free

- Facebook could flag highly polarised or partisan content and provide options to view it alongside content from the opposing viewpoint

- Verification badges could be placed on content that complies with a recognised journalistic code of conduct
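The first two of these steps can be sketched in a few lines. The `Post` fields (a personalised `relevance` score and a partisan `lean` from -1.0 to +1.0), the 0.7 threshold and the example posts are all hypothetical; estimating a post’s lean reliably is the genuinely hard part and is simply assumed here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

@dataclass
class Post:
    text: str
    topic: str
    posted_at: datetime
    relevance: float  # hypothetical personalised ranking score
    lean: float       # hypothetical partisan lean, from -1.0 to +1.0

def build_feed(posts: list[Post], personalised: bool = True) -> list[Post]:
    """Return a ranked feed, or a plain newest-first feed when
    personalisation is switched off."""
    if personalised:
        return sorted(posts, key=lambda p: p.relevance, reverse=True)
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)

def opposing_view(post: Post, pool: list[Post],
                  threshold: float = 0.7) -> Optional[Post]:
    """For a highly partisan post, suggest the most relevant post on the
    same topic that leans the other way; otherwise return None."""
    if abs(post.lean) < threshold:
        return None
    opposite = [p for p in pool
                if p.topic == post.topic and p.lean * post.lean < 0]
    return max(opposite, key=lambda p: p.relevance, default=None)

posts = [
    Post("Tax cut will fix everything", "tax",
         datetime(2022, 3, 1, 9), relevance=0.9, lean=0.9),
    Post("Tax cut is a disaster", "tax",
         datetime(2022, 3, 1, 12), relevance=0.2, lean=-0.8),
    Post("Local bake sale on Saturday", "community",
         datetime(2022, 3, 1, 15), relevance=0.5, lean=0.0),
]
print([p.text for p in build_feed(posts)])
print([p.text for p in build_feed(posts, personalised=False)])
print(opposing_view(posts[0], posts).text)
```

Switching personalisation off simply replaces the ranking with time order, and the flag step leaves non-partisan posts such as the bake sale untouched.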

Furthermore, education could be provided to help individuals step outside of their own echo chambers. We have a responsibility to seek “disconfirmation”.

It does not come naturally to us, but being an informed citizen means consuming information that we may not agree with.

We all would benefit from being a little less certain.

Summary

Social connections form the fabric of our society. But our very tendency to form like-minded communities can be exploited, leading to misinformation and division. The siren call of censorship may seem an appealing solution, but it may lure us towards a rock we are better off avoiding.

Removing claims outside of the consensus may seem desirable, but it is likely to make the problem worse. Censorship may even make people less trusting of authority, pushing the more malicious views underground where they could spread.

Instead, we should seek decentralised solutions.

Solutions that minimise the harm of misinformation whilst safeguarding a fundamental human right: the right to freedom of expression.