“Wow, did you take that? Wait a minute – that was done by a professional photographer…?”

That was the reaction from Jake, our MD, when I showed him the image below: a photorealistic image generated in seconds by OpenAI’s DALL·E 2 from the prompt “a macro photo of a butterfly on a sunflower”.

It looks remarkably real, capturing the sharpness and colour of the butterfly in the foreground and the soft focus of the sunflower behind it. So much so that you’d be hard pressed to find many people who could spot any obvious tell-tale signs, artefacts or sense of wrongness, which are common in AI-generated imagery.

This was the moment I realised we have reached the point at which advanced AI systems can interpret a prompt and create photorealistic images that are, in many cases, indistinguishable from real life. This has interesting implications for anyone working in the creative and digital industries, or indeed in any role that involves image sourcing or image manipulation.

In this article, I explore some practical applications of OpenAI’s DALL·E 2 in the creative and digital marketing world, and how its recently upgraded editing tools can be used to support creative work.

What is DALL·E 2?

OpenAI’s DALL·E 2 is an AI-based image generator that takes a simple text-based prompt and generates images based on the AI’s understanding of that prompt. Within seconds, you’ll get four image variations, which can then be downloaded, shared or saved as favourites in your OpenAI account.

DALL·E 2 is now available to everyone. You get 15 free credits per month; each generation uses 1 credit, and additional credits cost $15 per 115. You can generate images from scratch or upload your own photos to edit, provided they comply with the DALL·E 2 Content Policy, and, according to the terms of use, you “may use Generations for any legal purpose, including for commercial use.”
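As a quick sanity check on what that pricing means per image, here is a small Python sketch. It assumes the figures above (15 free credits a month, 115 paid credits for $15, one credit per generation of four images); it also assumes paid credits can only be bought in whole 115-credit packs, and it ignores credit expiry.

```python
# Rough cost model based on the DALL·E 2 pricing described in the article.
CREDIT_PACK_SIZE = 115          # credits per paid pack
CREDIT_PACK_PRICE_USD = 15.00   # price of one pack
IMAGES_PER_GENERATION = 4       # one credit buys one generation of four images


def cost_per_credit() -> float:
    """Price of a single generation when paying for credits."""
    return CREDIT_PACK_PRICE_USD / CREDIT_PACK_SIZE


def cost_per_image() -> float:
    """One credit = one generation = four images."""
    return cost_per_credit() / IMAGES_PER_GENERATION


def paid_cost(generations: int, free_credits: int = 15) -> float:
    """USD spent on `generations` generations in a month after free credits,
    assuming (hypothetically) that credits are only sold in whole packs."""
    paid_credits_needed = max(0, generations - free_credits)
    packs = -(-paid_credits_needed // CREDIT_PACK_SIZE)  # ceiling division
    return packs * CREDIT_PACK_PRICE_USD


print(f"${cost_per_credit():.3f} per generation")  # $0.130 per generation
print(f"${cost_per_image():.3f} per image")        # $0.033 per image
print(paid_cost(100))                              # 15.0
```

On these assumptions a generation works out at roughly 13 cents, or about 3 cents per image.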

This makes attribution an interesting question: how much credit should go to the person writing the prompts, to the source image when editing, or to DALL·E 2 itself? All the images in this blog post, for example, were either generated with DALL·E 2 or are stock library images I modified with it, but I would feel somewhat of a fraud attributing these generations or modifications to myself.

Images can be generated based on:

- Subject matter: you can generate images of almost anything you want, such as landscapes, animals, objects and abstract concepts, as long as they follow the content policy, which, for example, bans generating images of famous people to prevent the spread of deepfakes.

- Medium: from pencil sketches and oil paintings through to pixel art and digital illustrations, DALL·E 2 can generate images representing any kind of medium.

- Environmental settings: add to the prompt environmental factors such as “sunset” or “fog” to give your images a bit of atmosphere.

- Location: if you need to place your images in a particular location, add a city or country to the prompt and you should get landmarks, building styles and other details associated with that place.

- Artistic style: DALL·E 2 can generate images in the style of different artists. Just add “in the style of [artist]” to your prompts.

- Camera settings: particularly useful for photographic styles, you can add camera setups to your requests to give photos a variety of different shots and qualities. Examples include “Macro 35mm shot”, “long exposure” or “fisheye lens”.

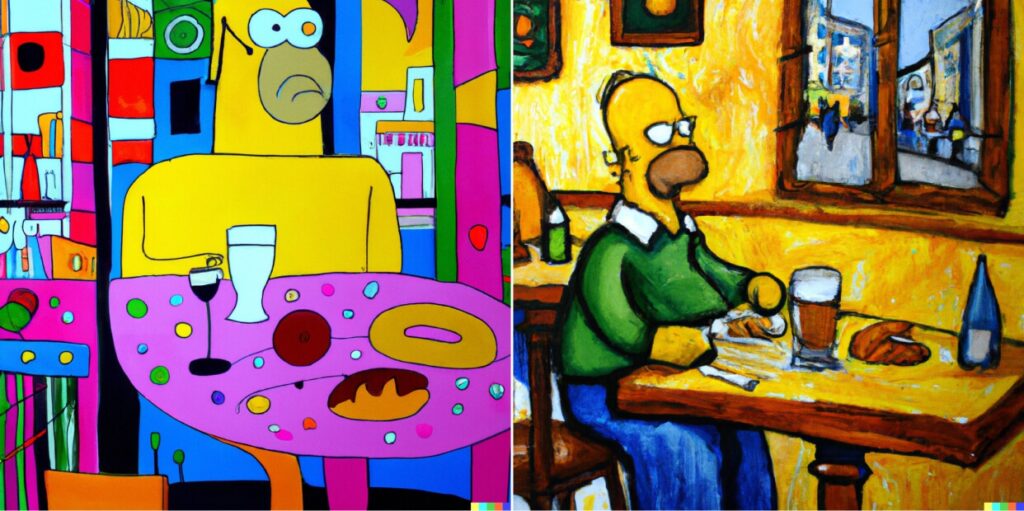

Just use natural language to combine any of the above to describe what you want, such as “Homer Simpson in a Parisian cafe in the style of Picasso”, and within seconds you’ll get four generations to choose from.
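Because a prompt is just text, the ingredients listed above can also be combined programmatically, which is handy when trying several variations. The `build_prompt` helper below is hypothetical: its ordering and comma-separated phrasing are one reasonable convention assumed for this sketch, not a format DALL·E 2 requires.

```python
from typing import Optional


def build_prompt(subject: str,
                 medium: Optional[str] = None,
                 location: Optional[str] = None,
                 setting: Optional[str] = None,
                 style: Optional[str] = None,
                 camera: Optional[str] = None) -> str:
    """Combine optional prompt ingredients into one natural-language prompt."""
    # Medium wraps the subject, e.g. "a macro photo of a butterfly".
    head = f"{medium} of {subject}" if medium else subject
    if location:
        head += f" in {location}"
    if style:
        head += f" in the style of {style}"
    # Environmental and camera descriptors are appended as comma-separated tags.
    extras = [part for part in (setting, camera) if part]
    return ", ".join([head] + extras)


print(build_prompt("Homer Simpson", location="a Parisian cafe", style="Picasso"))
print(build_prompt("a fox jumping through bluebells", medium="a photo",
                   setting="fog", camera="long exposure"))
```

The first call reproduces the Homer Simpson example exactly; the second produces “a photo of a fox jumping through bluebells, fog, long exposure”.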

How does DALL·E 2 work?

I won’t pretend to fully understand how DALL·E 2’s image generation works, or try to explain it in detail; there are plenty of articles that do this. What I can say is that it’s much more than a clever algorithm following a complex set of rules. It’s machine learning: an artificial intelligence trained on billions of source images and natural language, and on the relationship between the two.

At its heart is a diffusion model: it starts with random noise and iteratively refines it over many steps until something resembling the AI’s understanding of the prompt emerges.
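To make that loop a little more concrete, here is a toy sketch of the reverse (denoising) process used by DDPM-style diffusion models, in plain Python. It is not how DALL·E 2 is implemented: the trained neural network, which in a real model predicts the noise from the current image and the prompt, is replaced by a hypothetical `oracle_noise` function that already knows the target, and the “image” is just four numbers. The noise schedule values are illustrative assumptions.

```python
import math
import random


def linear_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.05):
    """Linear noise schedule: betas, alphas and their cumulative products."""
    betas = [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)
    return betas, alphas, alpha_bars


def oracle_noise(x_t, t, target, alpha_bars):
    """Hypothetical stand-in for the trained network: the exact noise separating
    x_t from the target. A real model has to *predict* this from x_t and the prompt."""
    ab = alpha_bars[t]
    return [(x - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab) for x, x0 in zip(x_t, target)]


def sample(target, steps: int = 200, seed: int = 0):
    rng = random.Random(seed)
    betas, alphas, alpha_bars = linear_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure random noise
    distances = []
    for t in reversed(range(steps)):
        eps = oracle_noise(x, t, target, alpha_bars)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # DDPM mean update: strip out a little of the predicted noise
        x = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # re-inject a smaller amount of fresh noise (variance beta_t)
            x = [xi + math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) for xi in x]
        distances.append(math.dist(x, target))
    return x, distances


target = [0.2, -0.5, 0.9, 0.4]  # a tiny "image" of four pixel values
final, distances = sample(target)
print([round(v, 3) for v in final])  # [0.2, -0.5, 0.9, 0.4]
```

Each pass removes some of the predicted noise and adds back a smaller amount of fresh noise, so `distances` trends towards zero as the sample settles on the target. In a real model, the quality of the result depends entirely on how well the network predicts that noise.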

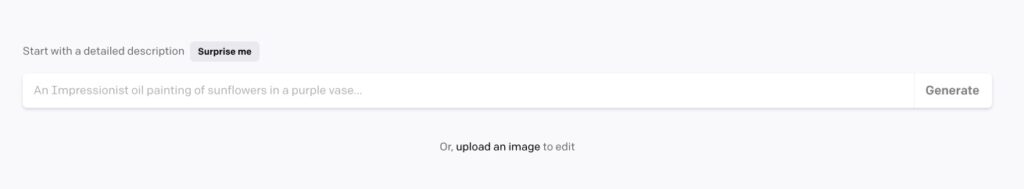

The DALL·E 2 tool itself is straightforward to use. Once logged in, you’re greeted with a simple input field where you can generate images from a text prompt or upload your own image to edit.

A number of image editing tools are available for both generated and uploaded images, which we’ll explore later in this article.

Using DALL·E 2 for image sourcing

One of the most obvious uses of AI-based image generation is sourcing photos or other image styles for blog posts, presentations, websites, adverts and various other media. Stock photo libraries such as Shutterstock, iStock or Unsplash are popular choices for image sourcing, but we will likely see people increasingly turn to tools like DALL·E 2 as a faster, lower-cost alternative that can also create imagery that is truly unique and doesn’t exist anywhere else online.

DALL·E 2 can be especially useful when sourcing imagery with a very specific subject, such as a “Golden Retriever sat on a beach looking out towards a sunset” or a “Photo of a fox jumping through bluebells in a woodland with the sun shining through trees”. Finding similar images in stock photo libraries would likely take longer, and in many cases such an image may not exist at all.

What I find really astonishing about photographic-style generation is how accurately DALL·E 2 replicates all kinds of environmental conditions, from the glare of sunlight and the casting of shadows through to the sharpness of close-up objects and the gradual blurring of more distant elements. You can also include prompts for various camera setups such as ‘Macro 35mm’, ‘Fisheye’ or ‘Lens Flare’.

When generating photographic-quality images, I’ve observed more realistic results for more common subject matter. There are many more photographs of dogs on beaches, for example, than of foxes jumping through bluebells, so there is more content for the AI to draw on.

One key limitation worth pointing out is that all generated images are limited to 1024 x 1024 pixels, so we won’t be generating photos for billboards any time soon…
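To put that limit in perspective, a common rule of thumb for high-quality print is around 300 pixels per inch. The sketch below simply divides the 1024 px edge by a few assumed print densities: at 300 DPI that is only about 3.4 inches (roughly 87 mm) per side.

```python
def max_print_size(pixels: int = 1024, dpi: int = 300) -> tuple:
    """Largest printable edge at a given density, as (inches, millimetres)."""
    inches = pixels / dpi
    return inches, inches * 25.4  # 25.4 mm per inch


# 300 DPI is a common target for close-viewed print; lower densities for comparison.
for dpi in (300, 150, 72):
    inches, mm = max_print_size(1024, dpi)
    print(f"{dpi:>3} DPI: {inches:.1f} in ({mm:.0f} mm) per side")
```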

Extending image dimensions

One of the more common uses I see for DALL·E 2 in the creative and marketing world is enhancing and editing existing images rather than generating entirely new ones. As a web developer, I often find a great image only for it to crop poorly once uploaded, because the image container has a different aspect ratio from the image itself.

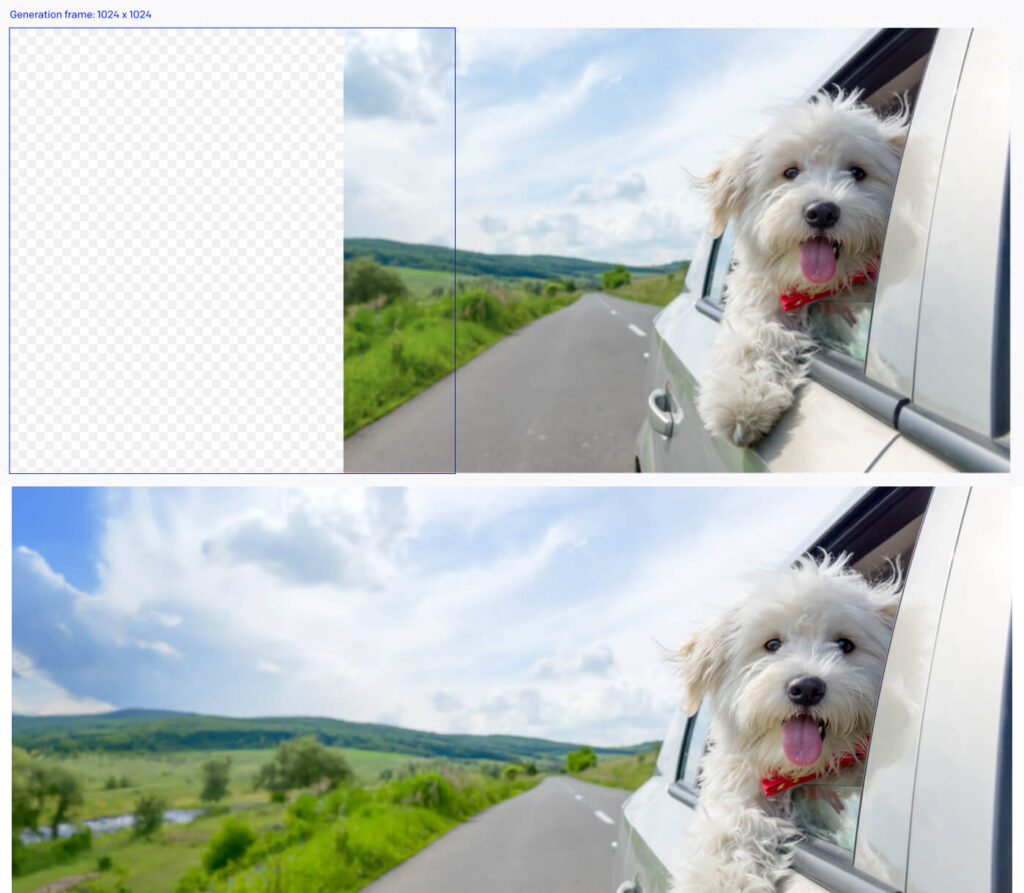

Here’s an example: a lovely stock image of a dog leaning out of a car window that was earmarked for a featured hero unit, but the photograph’s standard landscape ratio didn’t play nicely with the super-wide 21:9 ratio of the hero banner.

By uploading the image to DALL·E 2 and using the ‘Generation Frame’ tool, we can extend the image by letting the AI fill in the gaps. When using the Generation Frame, you’ll always want to maintain part of the original image within the frame to give the AI more information to work from.

The prompt text is also important here and usually you’ll want to describe what you want in the generation frame rather than the whole image. For this prompt, I just used “hills and sky” and let DALL·E 2 do the rest.
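Before opening the editor, it can help to work out how much extra canvas a target ratio needs. This is a back-of-the-envelope sketch, not part of DALL·E 2: it assumes the source image has been scaled so its height fits within a single 1024 px Generation Frame, splits the extension evenly between left and right, and uses a hypothetical `overlap` of 256 px of existing image kept inside each frame for context.

```python
import math


def outpaint_plan(width: int, height: int, ratio: tuple = (21, 9),
                  frame: int = 1024, overlap: int = 256) -> dict:
    """Estimate the horizontal extension needed to reach `ratio` and how many
    generation frames that might take (hypothetical planning helper).

    Assumes height <= frame, and that every frame keeps `overlap` px of
    existing pixels so the model has context to work from."""
    target_width = math.ceil(height * ratio[0] / ratio[1])
    extra = max(0, target_width - width)
    left = extra // 2                     # split the extension evenly
    right = extra - left
    new_px_per_frame = frame - overlap    # fresh pixels each frame can add
    frames = sum(math.ceil(side / new_px_per_frame) for side in (left, right))
    return {"target_width": target_width, "extend_left": left,
            "extend_right": right, "frames": frames}


print(outpaint_plan(1536, 1024))
# {'target_width': 2390, 'extend_left': 427, 'extend_right': 427, 'frames': 2}
```

For a 1536 × 1024 (3:2) photo, reaching 21:9 needs about 427 px of new content on each side, which fits within one frame per side under these assumptions.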

For every generation, DALL·E 2 gives you four variations to choose from. I felt the image above was the most believable, with a good extension of the road, a handful of generated trees, some interesting clouds that aren’t overly dramatic, and a stream (which I didn’t ask for, but which is a good addition) running behind the trees.

Editing out artefacts

Problematic parts of images can also be edited quickly and efficiently using DALL·E 2. The example below came from a recent request to remove a distracting reflection in a construction manager’s safety glasses.

I used the Eraser tool to remove the reflection (shown in the top left) and supplied the prompt “Woman with safety glasses looking at iPad”; DALL·E 2 generated the rest, right down to the rim and highlight of the glasses, the shading and accurate colour matching.
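Under the hood, this kind of eraser-based edit (usually called inpainting) comes down to a mask that marks which pixels the model may regenerate and which it must keep as context. A common convention, assumed here, is to use the alpha channel: transparent pixels (alpha 0) are regenerated and opaque pixels (alpha 255) are kept. The hypothetical `ellipse_erase_mask` below builds such a mask for an elliptical region, like the reflection on the glasses, as a plain grid rather than a real image file.

```python
def ellipse_erase_mask(width: int, height: int, cx: float, cy: float,
                       rx: float, ry: float) -> list:
    """Return a height x width grid of alpha values:
    0 = erased (to be regenerated), 255 = kept as context."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # standard point-in-ellipse test
            inside = ((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1.0
            row.append(0 if inside else 255)
        mask.append(row)
    return mask


mask = ellipse_erase_mask(16, 8, cx=5, cy=4, rx=3, ry=2)
for row in mask:
    # "." marks erased pixels, "#" marks pixels kept from the original image
    print("".join("." if alpha == 0 else "#" for alpha in row))
```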

Adding content to an existing image

Likewise, adding elements to an image can be just as easy as removing them. Here’s an example of a photo of a person looking out across a valley. Wouldn’t it be nice if their best friend was beside them? No problem: just erase a dog-sized space from the image using the Eraser tool and give an appropriate prompt. For the example below, I used “A golden retriever sitting next to the woman looking out into the distance”.

Generating visually similar images

Maybe you’ve found a really good image in terms of composition and subject matter, but for whatever reason it just doesn’t quite work. Without making any edits or changes to the prompt text, you can use the ‘Generate variations’ feature to create stylistically and compositionally similar images.

In the example below, the lighting, shading and camera angles are all very similar, and the same type of dog is regenerated alongside a woman in a jacket looking out into the hills with a road weaving through them. However, the individual elements have all changed: the hills and road are new, and the woman and dog are both different.

Creating Mood Boards

During the early stages of a creative project, Mood Boards are often used to set the visual style, tone and creative direction, drawing on a mix of existing screenshots, text and imagery. As you can prompt DALL·E 2 for any kind of visual style, from pencil drawings and paint through to pixel art and 3D renders, generative AI may provide a good starting point for setting a visual tone or direction.

Supporting brand rollout

When rolling out a new brand, it’s common to mock up how the new designs will look across different stationery items, or to visualise website designs in situ on different devices. DALL·E 2 may be able to help by generating a unique backdrop that ties in with the client’s business.

For example, you could generate an image of business cards in a wildflower field for an eco-orientated brand, or mock up a laptop with a modern house in the background for a business in the housebuilding sector.

Getting inspiration

I think we’re still a long way off generating print-ready or fully designed visuals for creative rollouts, but one area where DALL·E 2 could support the design process is by providing quick inspiration to get the creative juices flowing.

For example, I generated the images below while trying to create a logo for a new steampunk-themed ale, using the prompt “a logo design for a steampunk Ale”. While DALL·E 2 is great at understanding text prompts, generating text within images is one area where it struggles: in every variation produced, the text is either illegible or nonsensical. Despite that, I think these generations can still offer inspiration, whether it’s the colours, the composition or the elements used within the images.

Producing rough layouts

Another area where DALL·E 2 might be able to help is producing rough layouts and compositions that can serve as an idea or starting point for adverts and website designs. The website design and brochure advert examples below were based on the prompts “A website design for a sustainable energy company” and “A brochure design for a sustainable energy company”.
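Prompts like these that differ in a single word can be generated from one template, which makes it easy to try the same brief across several formats. The `expand_template` helper and its `{a|b}` slot syntax are assumptions of this sketch, not a DALL·E 2 feature.

```python
import itertools
import re
from typing import List

SLOT = r"\{[^{}]*\}"  # a slot such as {website|brochure}


def expand_template(template: str) -> List[str]:
    """Expand every {a|b|c} slot in `template` into all combinations of options."""
    options = [slot[1:-1].split("|") for slot in re.findall(SLOT, template)]
    pieces = re.split(SLOT, template)  # literal text between the slots
    prompts = []
    for combo in itertools.product(*options):
        text = pieces[0]
        for choice, piece in zip(combo, pieces[1:]):
            text += choice + piece
        prompts.append(text)
    return prompts


for prompt in expand_template("A {website|brochure} design for a sustainable energy company"):
    print(prompt)
```

With one two-option slot this yields the two prompts above; adding a second slot with three options would produce six prompts, one for every combination.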

Having learned from billions of source images, DALL·E 2 naturally incorporates standard layout conventions. In the website design example, the main navigation appears at the top in every variation, the brand logo is usually in the top left, and there is almost always a large hero unit with a large headline below the header, followed by paragraphs of text. Likewise, green was a common colour theme across these variations, as it has a strong association with the word ‘sustainability’.

The text is notably illegible and much of the imagery within the layouts is obscure, but from a compositional point of view I think there’s enough potential here for DALL·E 2 to play a supporting role when looking for layout inspiration.

Conclusion

OpenAI’s DALL·E 2, and the advancements we now see in AI-based image generation models such as Google’s Imagen (not yet available to the public), are undoubtedly impressive, but I see their role in the digital marketing and creative space as a supportive one rather than a direct threat to creative and marketing roles. As the examples above show, there are some obvious limitations, especially around output size and text generation. Even with the photographic images used in this article, most people would probably be able to tell that many of them are not real photos, but this will become increasingly hard to spot over time.

We’re still in the early stages of AI-based image generation, but I see many useful applications for the creative and marketing sector, from generating inspiration and ideas through to image sourcing and advanced image editing. It won’t be long before APIs for these tools become available and are embedded in popular design tools such as Photoshop or Figma. It’s a fascinating field, and I’ll be following its progress over the coming months and years.